Allion Labs | Franck Chen

In our previous article, we discussed common issues consumers encounter with smart TVs and how Allion can help manufacturers address them. This time, we take a closer look at the specifics of the test measurements and data.

Reviewing TVs with Voice Assistants

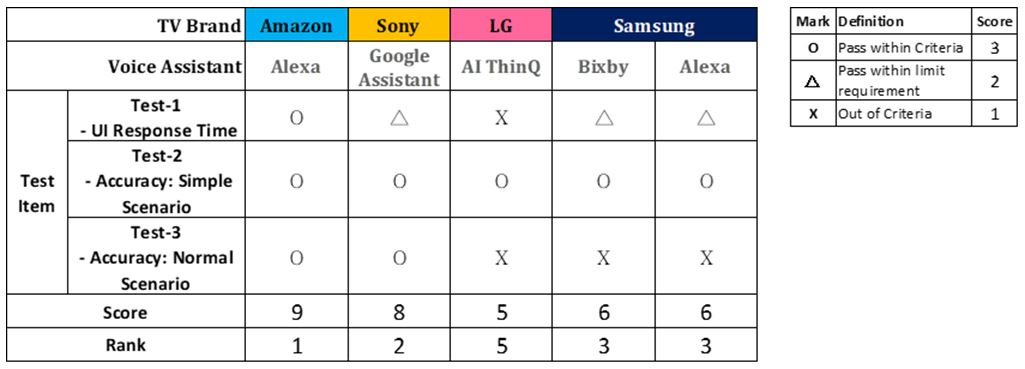

We tested four 2021 smart TV models from different brands, each equipped with a voice assistant.

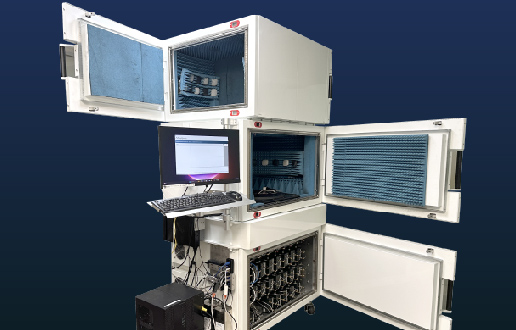

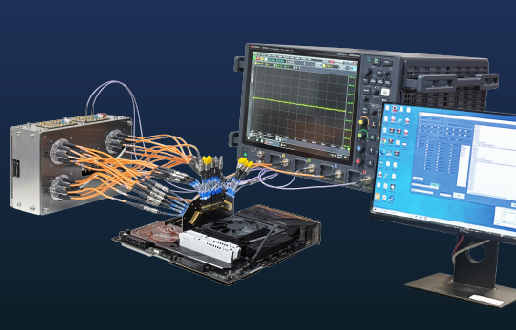

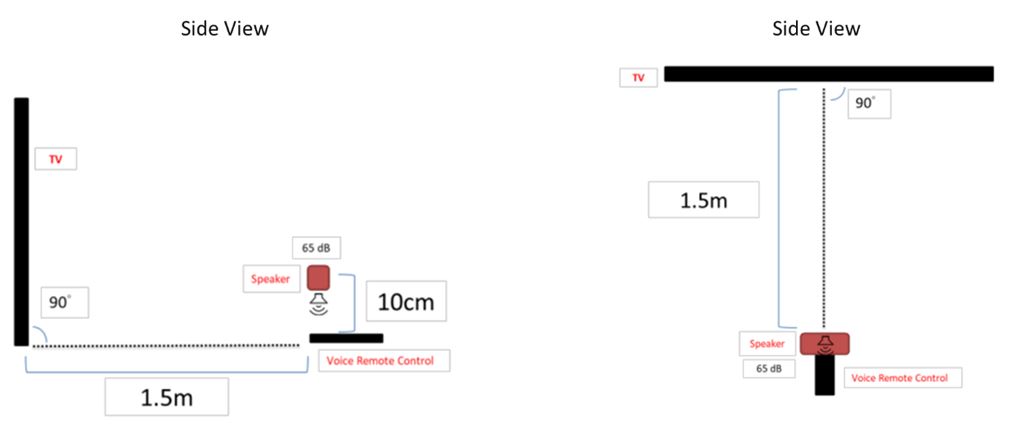

Environmental Parameters and Setup

- Wireless Environment: Open

- Voice Input Language: English

- TV Network: Wired network

- Relative Position: Shown in the figure below

Test 1: Voice Assistant Response Speed and Stability Test

Test Scenario

Step 1: On the TV's Home screen, press the [Voice] button.

Step 2: The TV displays the voice assistant interface.

Test Parameters

Time elapsed from Step 1 to Step 2.
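The Test Parameter above comes down to timing the interval between the button press and the appearance of the voice assistant interface. The sketch below shows one way an automated rig might take that measurement. It assumes two hypothetical hooks, `press_voice_button` (for example, an IR transmitter) and `voice_ui_visible` (for example, an image check on a camera capture of the screen); neither comes from a real library, and the article does not describe Allion's actual tooling.

```python
import time
from typing import Callable, Optional


def measure_response_ms(
    press_voice_button: Callable[[], None],
    voice_ui_visible: Callable[[], bool],
    timeout_s: float = 5.0,
    poll_interval_s: float = 0.01,
) -> Optional[float]:
    """Time from the [Voice] press (Step 1) to the voice UI appearing (Step 2).

    Both callables are hypothetical hooks into a test rig.
    Returns elapsed milliseconds, or None if the UI never appeared
    within timeout_s (which would count as a failed attempt).
    """
    start = time.monotonic()       # monotonic clock is unaffected by wall-clock changes
    press_voice_button()           # Step 1 (its own latency is included in the reading)
    while time.monotonic() - start < timeout_s:
        if voice_ui_visible():     # Step 2 detected
            return (time.monotonic() - start) * 1000.0
        time.sleep(poll_interval_s)
    return None
```

Because the loop polls every 10 ms by default, each reading carries up to one poll interval of error (plus camera frame latency on a real rig), which is small compared with the 1,000 ms recommended value used in this test.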

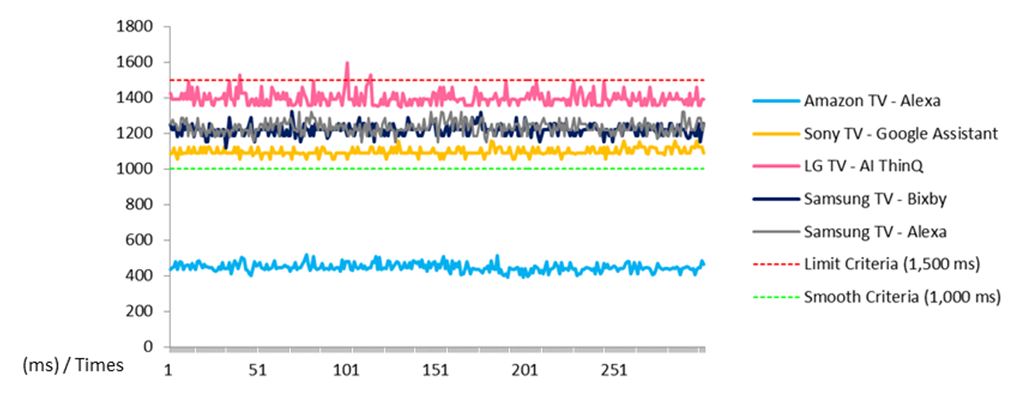

Test Results (300 Attempts)

Result Analysis

- Average Response Speed

Best Performance: Amazon TV-Alexa

It is the only model whose response time falls below the recommended value of 1,000 ms, and its overall response, including the UI presentation, feels quick and intuitive.

Worst Performance: LG TV-AI ThinQ

Many measurements are close to or above 1,500 ms, the point at which users begin to notice a slight delay, so its overall fluency needs improvement.

- Comparison of the Same Voice Assistant Working on Different TV Operating Systems

Taking Alexa as an example, its response time on the Samsung TV (1,234 ms) falls well short of that on the Amazon TV (446 ms). This suggests that the TV's own functions and design affect the overall response speed. Since the same voice assistant does not perform identically on different operating systems, consumers should take this into account when purchasing.
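The analysis above judges each TV against two reference points: the recommended 1,000 ms, and roughly 1,500 ms, where users start to notice a delay. A minimal sketch of that classification follows. The labels, and the choice to exclude timed-out attempts from the average while counting them separately, are assumptions for illustration rather than Allion's published criteria.

```python
from statistics import mean
from typing import Optional, Sequence

RECOMMENDED_MS = 1_000  # recommended response value cited in the article
NOTICEABLE_MS = 1_500   # approximate point where users notice a delay


def classify_average(avg_ms: float) -> str:
    """Place an average response time relative to the two reference points."""
    if avg_ms < RECOMMENDED_MS:
        return "below recommended"
    if avg_ms < NOTICEABLE_MS:
        return "above recommended"
    return "noticeable delay"


def summarize_latency(samples_ms: Sequence[Optional[float]]) -> dict:
    """Summarize one TV's samples; None marks an attempt with no response."""
    valid = [s for s in samples_ms if s is not None]
    avg = mean(valid) if valid else float("inf")
    return {
        "attempts": len(samples_ms),
        "timeouts": len(samples_ms) - len(valid),
        "average_ms": avg,
        "label": classify_average(avg),
        # share of individual responses at or beyond the noticeable-delay point
        "share_noticeable": (
            sum(s >= NOTICEABLE_MS for s in valid) / len(valid) if valid else 0.0
        ),
    }
```

With the Alexa figures reported above, `classify_average(446)` returns "below recommended" for the Amazon TV and `classify_average(1234)` returns "above recommended" for the Samsung TV. The `share_noticeable` field reflects the kind of observation made about LG, where many individual readings, not just the average, sit near 1,500 ms.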

Test 2: Voice Assistant Execution Rate and Accuracy Rate Test – Simple Scenario

Test Scenario

Step 1: On the TV's Home screen, press the [Voice] button to activate the voice assistant.

Step 2: Say the voice command "Go to YouTube" and wait 10 seconds.

Step 3: Go back to the Home Screen by pressing the [Home] key.

Test Parameters

Step 1: Whether the voice assistant activates correctly.

Step 2: Whether the voice assistant correctly opens YouTube.
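The two Test Parameters suggest sorting every attempt into one of three outcomes. The sketch below computes an execution rate and an accuracy rate from such outcomes and checks both against the requirement. The exact definitions are assumptions, since the article does not spell them out: here the execution rate is the share of attempts in which the assistant activated, and the accuracy rate is the share in which YouTube opened correctly. Following the article's wording ("more than 95%"), exactly 95% is treated as a fail.

```python
from collections import Counter
from enum import Enum
from typing import Iterable

REQUIRED_RATE = 0.95  # "more than 95%" requirement from the article


class Outcome(Enum):
    NOT_ACTIVATED = "assistant did not activate"    # fails the Step 1 parameter
    WRONG_RESULT = "activated, YouTube not opened"  # fails the Step 2 parameter
    SUCCESS = "activated, YouTube opened"


def rates(outcomes: Iterable[Outcome]) -> dict:
    """Execution and accuracy rates under the assumed definitions above."""
    counts = Counter(outcomes)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no attempts recorded")
    activated = total - counts[Outcome.NOT_ACTIVATED]
    execution_rate = activated / total
    accuracy_rate = counts[Outcome.SUCCESS] / total
    return {
        "total": total,
        "execution_rate": execution_rate,
        "accuracy_rate": accuracy_rate,
        "passes": execution_rate > REQUIRED_RATE and accuracy_rate > REQUIRED_RATE,
    }
```

With 300 attempts, each failure costs about 0.33 percentage points, so staying above 95% allows at most 14 failed attempts: 286 successes give about 95.3%, while 285 give exactly 95% and do not pass.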

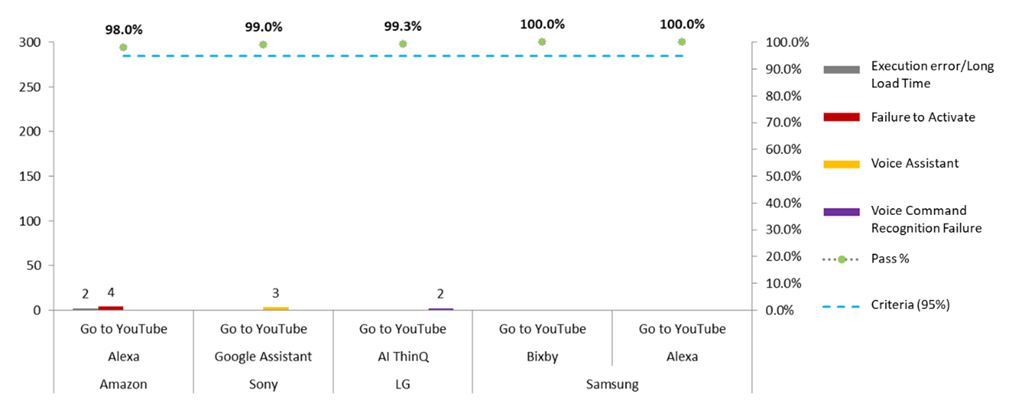

Test Results (300 Attempts)

Result Analysis

- Best Performance: Every voice assistant met the requirement of an execution rate and accuracy rate above 95%. Samsung TV-Bixby/Alexa performed best, with no errors at all.

- Worst Performance: Amazon TV-Alexa had 6 errors, and its voice assistant failed to activate 4 times, which makes for a poor user experience.

Problems like this are hard to catch through ordinary manual inspection, let alone capture the key logs needed for analysis and improvement!

- Comparison of the Same Voice Assistant on Different Operating Systems

- Taking Alexa as an example, it performed better on the Samsung TV than on the Amazon TV. Together with the results of Test 1, this confirms that the same voice assistant does not perform the same on different TV systems.

- Factors that may affect the overall performance of a voice assistant include each remote control's radio reception, its voice data transmission, the TV system and UI design, and resistance to interference. During development, manufacturers cannot rely solely on the capabilities of the voice assistant itself; they must also test it under real usage conditions on the TV!

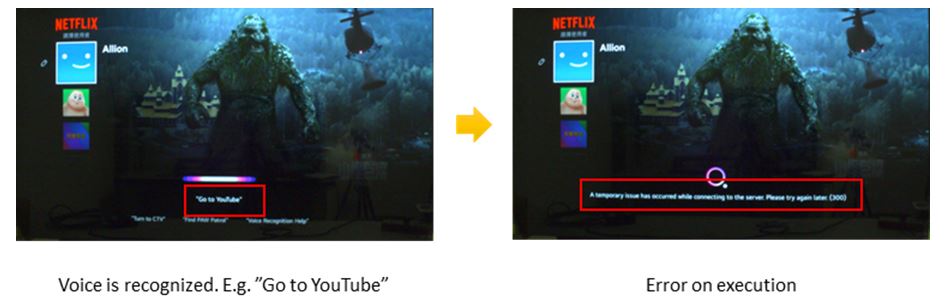

Issue Summary

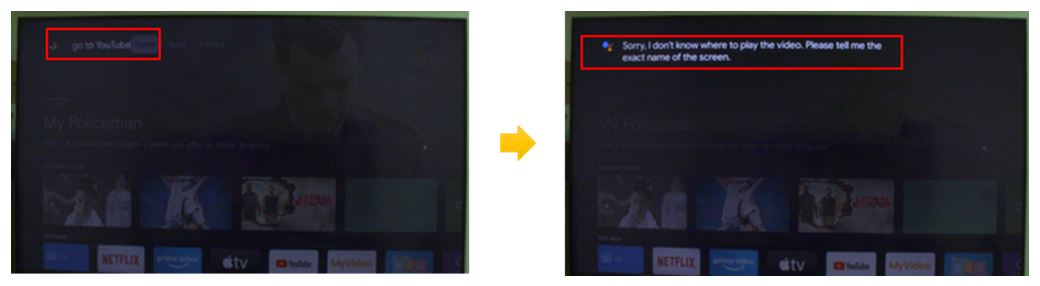

Sony TV-Google Assistant

In many attempts, "Go to YouTube" was recognized, but the assistant did not know how to proceed.

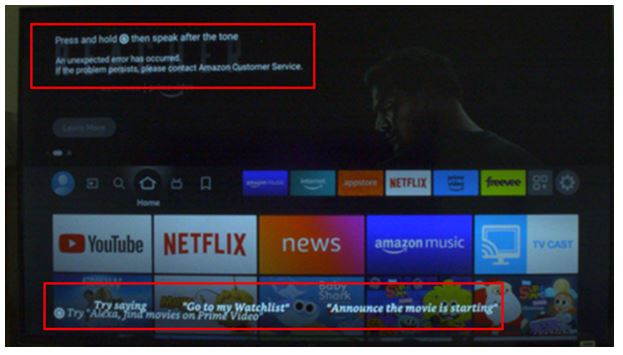

Amazon TV-Alexa

An execution error occurs after the voice assistant is activated.

Even the simplest scenario above revealed some problems and differences between the models. Next, we tested a more complex user scenario, and the results were unexpected!

Test 3: Voice Assistant Execution Rate and Accuracy Test – Normal Situation

Test Scenario

Step 1: Shut down the TV and wait 5 minutes.

Step 2: Turn on the TV and wait 30 seconds.

Step 3: Press the [Voice] button, say "Open Netflix", and wait 10 seconds.

Step 4: Press the [Voice] button, say "Go to YouTube", and wait 30 seconds. Then return to Step 1.

Test Parameters

Step 3: The voice assistant activates normally and correctly opens Netflix → First Goal

Step 4: The voice assistant activates normally and correctly opens YouTube → Second Goal
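Test 3 is a loop that cold-boots the TV before each pair of commands. The sketch below expresses that loop against a hypothetical `TVRig` interface (`power_off`, `power_on`, `voice_command`, `foreground_app`); these names are placeholders for whatever remote-control, audio-playback, and screen-capture tooling a real rig would use, not an actual API. The sleep function is injectable so the schedule can be dry-run without waiting.

```python
import time
from typing import Callable, Protocol


class TVRig(Protocol):
    """Hypothetical test-rig interface; not a real library API."""

    def power_off(self) -> None: ...
    def power_on(self) -> None: ...
    # Press [Voice] and play the phrase; False if the assistant did not activate.
    def voice_command(self, phrase: str) -> bool: ...
    def foreground_app(self) -> str: ...


def run_test3(rig: TVRig, attempts: int = 100, boot_wait_s: float = 30,
              sleep: Callable[[float], None] = time.sleep) -> dict:
    """Run the Test 3 cycle and return the First/Second Goal success rates."""
    if attempts <= 0:
        raise ValueError("attempts must be positive")
    first_ok = second_ok = 0
    for _ in range(attempts):
        rig.power_off()
        sleep(5 * 60)                                  # Step 1: TV off for 5 minutes
        rig.power_on()
        sleep(boot_wait_s)                             # Step 2: wait after boot-up
        activated = rig.voice_command("Open Netflix")  # Step 3
        sleep(10)
        if activated and rig.foreground_app() == "Netflix":
            first_ok += 1                              # First Goal met
        activated = rig.voice_command("Go to YouTube") # Step 4
        sleep(30)
        if activated and rig.foreground_app() == "YouTube":
            second_ok += 1                             # Second Goal met
    return {"first_goal_rate": first_ok / attempts,
            "second_goal_rate": second_ok / attempts}
```

At these timings, 100 cycles take about 100 × (300 + 30 + 10 + 30) s ≈ 10.3 hours before counting boot and command time, which illustrates why a scenario like this is impractical to run by hand. The `boot_wait_s` parameter is where a longer post-boot wait would be plugged in.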

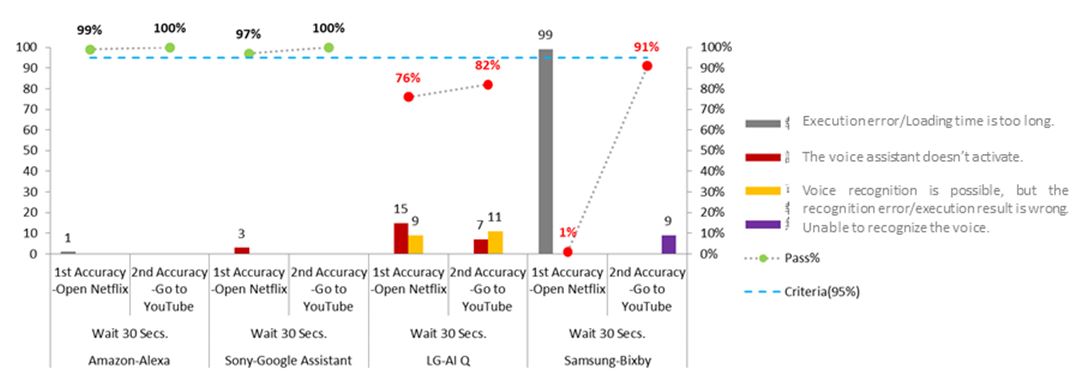

Test Results (100 Attempts)

Result Analysis

- Best Performance: Amazon TV-Alexa, Sony TV-Google Assistant

The two performed identically. After boot-up, the accuracy rate of the first voice command met the standard, and the second voice command produced no errors at all!

- Worst Performance: LG TV-AI ThinQ, Samsung TV-Bixby

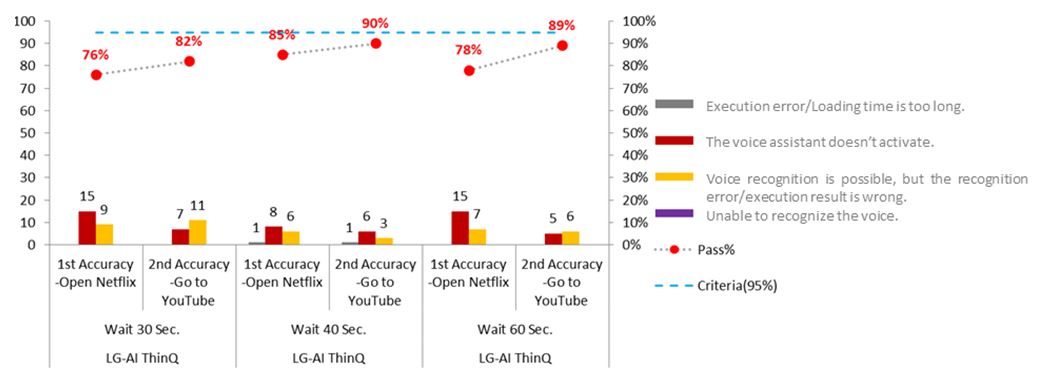

LG-AI ThinQ

The correct execution rate of the first voice command is only 76%. Although the accuracy rate of the second voice command rises to 82%, it still falls well short of the 95% standard. Besides the main issue of the voice assistant failing to activate, there were many cases where the voice command was recognized but executed incorrectly.

Samsung TV-Bixby

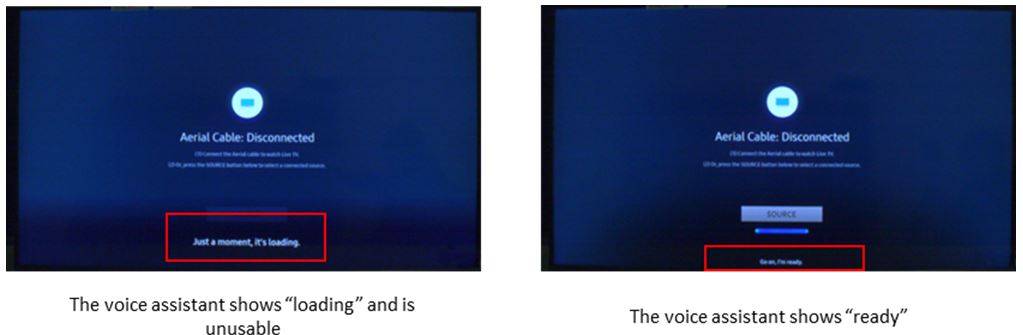

The accuracy rate of the first voice command is only 1%. The main reason is that even 30 seconds after the TV is turned on, the activated voice assistant still displays "loading" and cannot be used (bottom left picture), so almost all first voice commands fail. Sometimes, even when the "Go on, I'm ready" prompt is displayed (bottom right picture), the assistant does not recognize any speech!

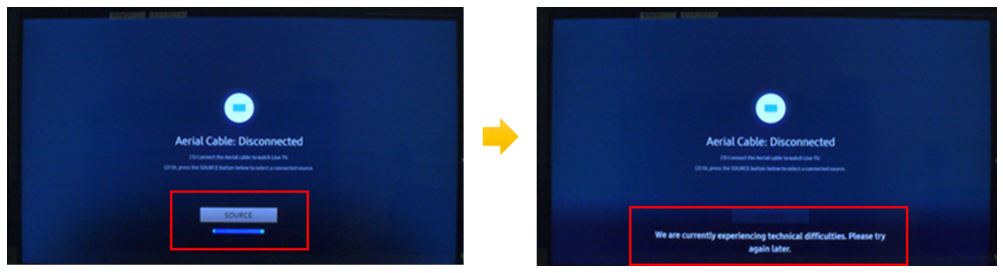

The execution accuracy rate of the second voice command improves greatly, to 91%. However, in many attempts the voice assistant activated (bottom left picture) but could not recognize or execute the command (bottom right picture), so the accuracy rate of 91% still falls short of the requirement of more than 95%.

Test Summary and Current Ranking

For now, Amazon TV-Alexa leads the ranking, while LG-AI ThinQ trails behind. How the models perform and rank in more complex scenarios, such as those with Bluetooth and wireless interference, will be covered in future articles.

The cases above show that obtaining precise measurements and catching serious problems that occur only intermittently requires more than good use of automation tools: scenario design is equally crucial, and both are indispensable.

Allion would like to remind clients that the voice assistant is an important aspect of a smart TV. Drawing on our experience, we can develop automated tools and design key test scenarios that help you control the quality of your TV more efficiently and strengthen its market competitiveness!

Supplementary Test Data

We repeated Test 3 with the wait time in Step 2 (after turning the TV back on) extended to 40 and 60 seconds.

LG-AI ThinQ

Result Analysis

Even with the post-boot wait extended to 40 and 60 seconds, the accuracy rates of neither the first nor the second voice command improved significantly, and both remain below the required 95%. Overall, turning the TV off and on again affects the functionality of the voice assistant.

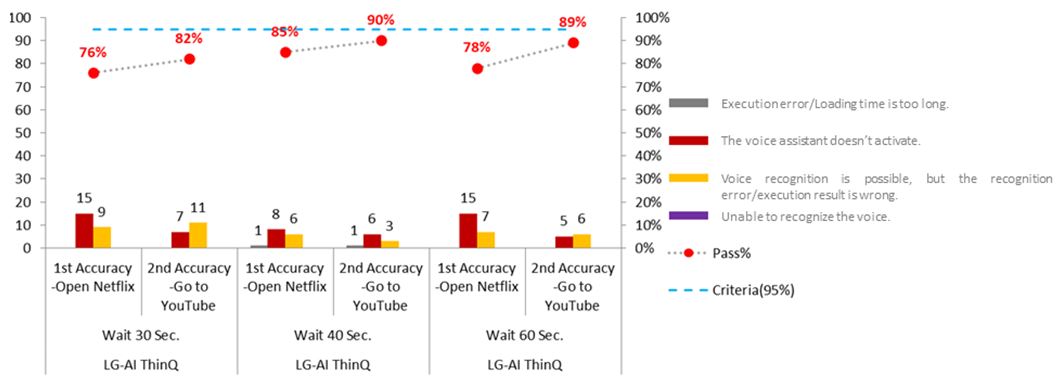

Samsung TV-Bixby, Alexa

Result Analysis

Wait time extended to 40 seconds after boot-up

The First Voice Command

The problem of the voice assistant taking too long to load became much less frequent, but a new problem appeared: the voice assistant could not recognize speech, resulting in an overall accuracy rate of 0%.

The Second Voice Command

Recognition failures were greatly reduced, but the overall accuracy rate of 89% is still below the required standard (95%).

Wait time extended to 60 seconds after boot-up

The First Voice Command

Both problems occurred: the voice assistant took too long to load, and it was unable to recognize speech, resulting in an overall accuracy rate of 0%.

The Second Voice Command

Only 2 cases of the voice assistant failing to recognize speech remained, and the overall accuracy rate rose to 97%, meeting the standard (95%).
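Tabulating the Samsung TV-Bixby figures from Test 3 and this supplementary run against the 95% requirement makes the pattern explicit: a longer post-boot wait eventually brings the second command up to standard, but never the first. The sketch below only re-checks the percentages reported in this article; it does not derive them from raw test logs.

```python
REQUIRED_PCT = 95  # "more than 95%" requirement used throughout the article

# Samsung TV-Bixby accuracy rates (%) as reported in this article,
# keyed by the post-boot wait time in seconds.
BIXBY_RESULTS = {
    30: {"first": 1, "second": 91},
    40: {"first": 0, "second": 89},
    60: {"first": 0, "second": 97},
}


def verdicts(results: dict) -> dict:
    """Mark each command's accuracy rate as pass/fail against the requirement."""
    return {
        wait: {cmd: ("pass" if pct > REQUIRED_PCT else "fail") for cmd, pct in pcts.items()}
        for wait, pcts in results.items()
    }


for wait, v in verdicts(BIXBY_RESULTS).items():
    print(f"{wait} s wait: first command {v['first']}, second command {v['second']}")
```

Only the 60-second row passes, and only for the second command, which matches the conclusion drawn below.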

Samsung TV-Alexa: wait time extended to 60 seconds after boot-up

The First Voice Command

The voice assistant either failed to activate, or recognized the voice but executed the command incorrectly, resulting in an overall accuracy rate of 0%.

The Second Voice Command

No issues occurred, and the accuracy rate of the voice commands rose to 100%.

These results show that on the Samsung TV, the voice assistant's first attempt after the TV is turned off and on again is affected, whether Bixby or Alexa is used. Compared with Amazon TV-Alexa, this again shows that the same voice assistant does not perform the same on different TV operating systems. Whether you are a voice assistant manufacturer, a TV manufacturer, a distributor, or a consumer, this is worth paying attention to when choosing a product.

Take the Next Step

With over 30 years of IT testing experience, Allion Labs provides certification services, customized test services, professional market evaluations, and competitive product analysis reports to help customers improve product performance and user experience. We help major manufacturers build their brand reputation and seize market opportunities amid fierce product competition.

If you have any questions about this article or would like a consultation, please feel free to contact us at service@allion.com.